What is speech to text software?

Speech to text software is used for translating spoken words into a written format. This process is also known as speech recognition or computer speech recognition. There are many applications, tools, and devices that can transcribe audio in real time so it can be displayed and acted upon accordingly.

What is the Current State of Speech Recognition?

Recent technological developments in the area of speech recognition have not only made our lives more convenient and our workflows more productive, but have also opened up opportunities that were deemed “miraculous” back in the day.

Speech-to-text software has a wide variety of applications, and the list continues to grow every year. Healthcare, customer service, qualitative research, and journalism are just some of the industries where voice-to-text conversion has already become a major game-changer.

Why Do We Need Speech to Text software?

1. It reduces the time to transcribe content

Professionals, students, and researchers in various industries use high-quality transcripts to perform their work-related activities. The technology behind voice recognition is advancing at a fast pace, making it quicker, cheaper, and more convenient than transcribing content manually.

Current speech to text software isn’t as accurate as a professional transcriber, but depending on the audio quality, the software can be up to 85% accurate.
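Accuracy figures for transcription are commonly derived from the word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the machine transcript into a human reference transcript, divided by the length of the reference. The sketch below is a minimal illustration of that idea. It is an assumption that the percentage quoted here was measured this way, and the function names `word_error_rate` and `word_accuracy` are made up for this example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,         # reference word missing in hypothesis
                current[j - 1] + 1,      # extra word in hypothesis
                previous[j - 1] + cost,  # match or substitution
            ))
        previous = current
    return previous[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Accuracy expressed as 1 - WER, floored at zero."""
    return max(0.0, 1.0 - word_error_rate(reference, hypothesis))
```

For instance, comparing the reference "he listens to a podcast" with the machine output "he listen to a podcast" gives one substitution in five words, so the word accuracy under this definition is 80%.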

2. Speech to text software makes audio accessible

Why is Speech to Text Recognition currently booming here in Europe? The answer is quite simple: digital accessibility. As described in the EU Directive 2016/2102, governments must take measures to ensure that everyone has equal access to information. Podcasts, videos, and audio recordings need to be supplied with captions or transcripts to be accessible to people with hearing disabilities.

How is speech to text software used in different industries?

Speech to text technology is no longer just a convenience for everyday people; it’s being adopted by major industries like marketing, banking, and healthcare. Voice recognition applications are changing the way people work by making simple tasks more efficient and complex tasks possible.

Customer Support call analytics

Machine-made transcription is a tool that helps you understand customer conversations, so you can make changes to improve customer engagement. This service also makes your customer service team more productive.

Media and broadcasting subtitling

Speech to text software helps to create subtitles for videos, so that they can be watched by people who are deaf or hard of hearing. Adding subtitles to videos makes them accessible to wider audiences.
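To make this a bit more concrete, here is a minimal sketch of how timestamped transcript segments could be turned into a subtitle file in the widely used SubRip (.srt) format. The input shape, a list of `(start_seconds, end_seconds, text)` tuples, and the function names are assumptions for illustration only; real transcription services each have their own output format.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time offset in seconds as HH:MM:SS,mmm (the SRT convention)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments) -> str:
    """Build SRT text from (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        # Each cue: a running number, a time range, then the caption text.
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # Cues are separated by a blank line.
    return "\n".join(blocks)
```

Calling `to_srt([(0.0, 2.5, "Hello"), (2.5, 4.0, "World")])` produces two numbered cues that most video players can load as subtitles.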

Healthcare

With transcription, medical professionals can record clinical conversations into electronic health record systems for fast and simple analysis. In healthcare, this process also helps improve efficiency by providing immediate access to information and inputting data.

Legal

Speech to text software helps in the legal transcription process of automatically writing or typing out often lengthy legal documents from an audio and/or video recording. This involves transforming the recorded information into a written format that is easily navigated.

Education

Using speech to text can be a beneficial way for students to take notes and interact with their lectures. With the ability to highlight and underline important parts of the lecture, they can easily go back and review information before exams. Students who are deaf or hard of hearing also find this software helpful, as it can caption online classes or seminars.

How Does Speech to Text Software Work?

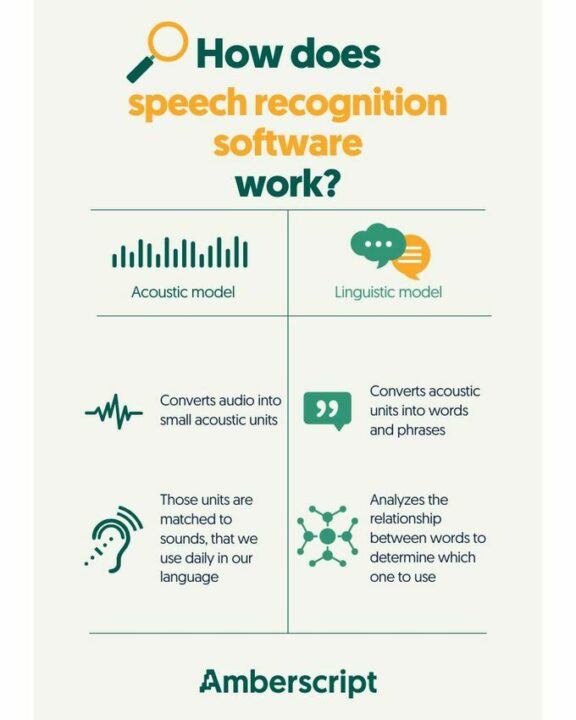

The core of a speech to text service is the automatic speech recognition system. The systems are composed of acoustic and linguistic components running on one or several computers.

What is speech to text acoustic model?

The acoustic component is responsible for converting the audio in your file into a sequence of acoustic units, which are super small sound samples. Have you ever seen a waveform of a sound? That’s what we call analogue sound: the vibrations that you create when you speak. They are converted to digital signals so that the software can analyze them. Then, these acoustic units are matched to existing “phonemes”, the sounds that we use in our language to form meaningful expressions.
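The first part of that process, turning a continuous waveform into small digital chunks, can be sketched in a few lines. This is only an illustration under stated assumptions: a pure 440 Hz tone stands in for a voice, and the 16 kHz sample rate, 25 ms frame length, and 10 ms step are typical values chosen for the example, not the settings of any particular engine. Matching the frames to phonemes requires a trained acoustic model and is not shown.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed for this example)
FRAME_MS = 25         # length of one frame in milliseconds (assumed)
STEP_MS = 10          # distance between frame starts in milliseconds (assumed)


def sample_tone(frequency_hz: float, duration_s: float, sample_rate: int = SAMPLE_RATE):
    """Sample a sine wave, standing in for the analogue vibrations of speech."""
    count = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * frequency_hz * n / sample_rate) for n in range(count)]


def quantize_16bit(samples):
    """Map amplitudes in [-1.0, 1.0] to signed 16-bit integers."""
    return [max(-32768, min(32767, round(s * 32767))) for s in samples]


def split_into_frames(samples, sample_rate: int = SAMPLE_RATE,
                      frame_ms: int = FRAME_MS, step_ms: int = STEP_MS):
    """Cut the signal into short, overlapping frames."""
    frame_len = sample_rate * frame_ms // 1000
    step = sample_rate * step_ms // 1000
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, step)]


digital = quantize_16bit(sample_tone(440.0, 0.1))  # 0.1 s of digitized "audio"
frames = split_into_frames(digital)                # short chunks for later analysis
```

In a real engine, each frame would then be converted into features and scored against the sounds the acoustic model has learned.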

What is speech to text linguistic model?

Thereafter, the linguistic component is responsible for converting this sequence of acoustic units into words, phrases, and paragraphs. There are many words that sound similar, but mean entirely different things, such as peace and piece.

The linguistic component analyzes all the preceding words and their relationship to estimate the probability of which word should come next. Geeks call the statistical models behind this “Hidden Markov Models”, and they have long been widely used in speech recognition software. That’s how speech recognition engines are able to determine parts of speech and word endings (with varied success).

Example: he listens to a podcast. Even if the sound “s” in the word “listens” is barely pronounced, the linguistic component can still determine that the word should be spelled with “s”, because it was preceded by “he”.
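The idea of using the preceding word to pick the most likely next word can be illustrated with a toy example. Note that this is a plain bigram count model with add-one smoothing, which is much simpler than a Hidden Markov Model and is not how any production engine works; the tiny corpus and all names below are made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny, made-up training corpus; a real model is trained on far more text.
CORPUS = [
    "he listens to a podcast",
    "she listens to the radio",
    "he listens to music",
    "they listen to a podcast",
    "we listen to music",
    "i listen to the radio",
]


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split()  # <s> marks the sentence start
        for previous, current in zip(words, words[1:]):
            counts[previous][current] += 1
    return counts


def probability(counts, previous, candidate, vocabulary_size):
    """Estimate P(candidate | previous) with add-one smoothing."""
    seen = counts[previous]
    return (seen[candidate] + 1) / (sum(seen.values()) + vocabulary_size)


def pick_word(counts, previous, candidates, vocabulary_size):
    """Choose the candidate that is most probable after the previous word."""
    return max(candidates,
               key=lambda word: probability(counts, previous, word, vocabulary_size))


BIGRAMS = train_bigrams(CORPUS)
VOCABULARY = {word for sentence in CORPUS for word in sentence.split()}
```

With this toy data, `pick_word(BIGRAMS, "he", ["listen", "listens"], len(VOCABULARY))` prefers "listens", while the same call with "they" as the preceding word prefers "listen".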

Before you are able to use an automatic transcription service, these components must be trained appropriately to understand a specific language. Both the acoustic part of your content (how it is being spoken and recorded) and the linguistic part (what is being said) are critical for the resulting accuracy of the transcription.

Here at Amberscript, we are constantly improving our acoustic and linguistic components in order to perfect our speech recognition engine.

What is a speaker dependent speech to text model?

There is also something called a “speaker model”. Speech recognition software can be either speaker-dependent or speaker-independent.

A speaker-dependent model is trained for one particular voice, such as the speech-to-text solution by Dragon. You can also train Siri, Google, and Cortana to only recognize your own voice (in other words, you’re making the voice assistant speaker-dependent).

This usually results in higher accuracy for your particular use case, but it does require time to train the model to understand your voice. Furthermore, a speaker-dependent model is not flexible and can’t be used reliably in many settings, such as conferences.

You’ve probably guessed it: a speaker-independent model can recognize many different voices without any training. That’s what we currently use in our software at Amberscript.

What Makes Amberscript’s Speech to Text Engine the best?

Our voice recognition engine is estimated to reach up to 95% accuracy, a level of quality that was previously unknown to the Dutch market. We would be more than happy to share where this unmatched performance comes from:

- Smart architecture and modelling. We are proud to work with a team of talented speech scientists who developed a sophisticated language model that is open for continuous improvement.

- Large amounts of training material. Speech-to-text software relies on machine learning. In other words, the more data you feed the system, the better it performs. We’ve collected terabytes of data on the way to such a high quality level.

- Balanced data. In order to perfect our algorithm, we used various sorts of data. Our specialists obtained a sufficient sample size for both genders, as well as different accents and tones of voice.

- Scenario exploration. We have tested our model in various acoustic conditions to ensure stable performance in different recording settings.

Natural Language Understanding – The Next Big Thing in voice to text

Let’s discuss the next major step forward for the entire industry: Natural Language Understanding (or NLU). It is a branch of Artificial Intelligence that explores how machines can understand and interpret human language. Natural Language Understanding allows speech recognition technology to not only transcribe human language but actually understand the meaning behind it. In other words, adding NLU algorithms is like adding a brain to a speech-to-text converter.

NLU aims to face the toughest challenge of speech recognition – understanding and working with unique context.

What Can You Do with Natural Language Understanding?

- Machine translation. That’s something that is already being used in Skype. You speak in one language, and your voice is automatically transcribed to text in a different language. You can treat it as the next level of Google Translate. This alone has enormous potential – just imagine how much easier it becomes to communicate with people who don’t speak your language.

- Document summarization. We live in a world full of data. Perhaps there is too much information out there. Imagine having an instant summary of an article, essay, or email.

- Content categorization. Similar to the previous point, content can be broken down into distinctive themes or topics. This feature is already implemented in search engines, such as Google and YouTube.

- Sentiment analysis. This technique is aimed at identifying human perceptions and opinions through a systematic analysis of blogs, reviews, or even tweets. This practice is already implemented by many firms, particularly those that are active on social media.

Yes, we’re heading there! We don’t know whether we’re gonna end up in a world full of friendly robots or the one from The Matrix, but machines can already understand basic human emotions.

- Plagiarism detection. Simple plagiarism tools only check whether a piece of content is a direct copy. Advanced software like Turnitin can already detect whether the same content was paraphrased, making plagiarism detection a lot more accurate.
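To give a feel for the sentiment analysis item in the list above, here is a deliberately simple sketch that scores a text by counting words from small positive and negative word lists. The word lists, the one-word negation rule, and the function names are all invented for this example. Real sentiment analysis systems rely on trained models and handle context far better than this.

```python
import re

# Hand-picked, illustrative word lists (not taken from any real lexicon).
POSITIVE = {"good", "great", "love", "excellent", "happy", "helpful"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "slow", "broken"}
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum +1 for positive words and -1 for negative words.

    A sentiment word directly preceded by a negation has its sign flipped.
    """
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = (word in POSITIVE) - (word in NEGATIVE)
        if polarity and i > 0 and words[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    return score


def sentiment_label(text: str) -> str:
    """Turn the numeric score into a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

A review such as "I love this app, the transcripts are great" would be labelled positive, while "The audio quality was not good" would be labelled negative thanks to the negation rule.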

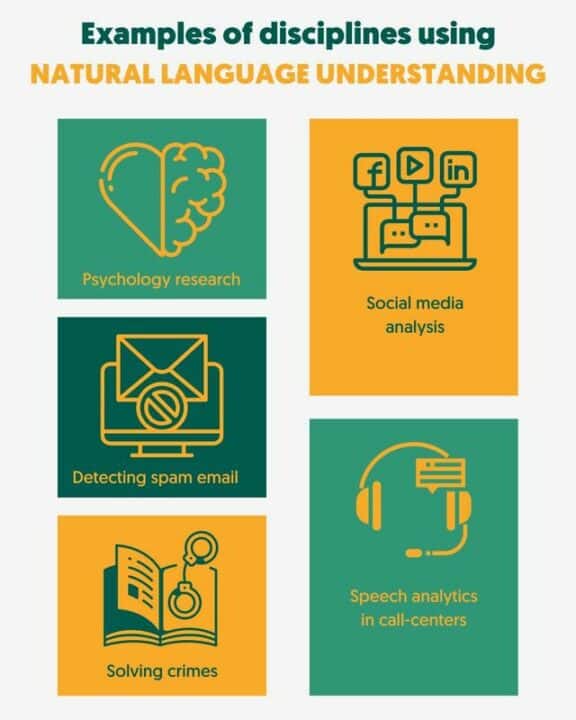

Where is NLU Applied These Days?

There are many disciplines in which NLU (as a subset of Natural Language Processing) already plays a huge role. The applications listed above are some examples.

What’s the future of Natural Language Processing?

We’re currently integrating NLU algorithms into our speech to text software to make our speech recognition even smarter and useful in a wider range of applications.

We hope that now you’re a bit more acquainted with the fascinating field of speech recognition!

3) The ultimate level of speech recognition is based on artificial neural networks, which essentially give the engine the ability to learn and self-improve. Google’s and Microsoft’s engines, as well as our own, are powered by machine learning.